The ICO publishes long-awaited content moderation guidance, coinciding with the end of Ofcom's illegal harms consultation

On 16 February 2024 the ICO published guidance on the application of data protection law to content moderation activities (the "Guidance"), following a call for views last year. The topic is under increasing regulatory scrutiny as online safety regulation evolves, including the UK's Online Safety Act ("OSA"), which received Royal Assent on 26 October 2023. The Guidance also applies beyond the activities required by the OSA. We summarise the key takeaways for businesses in this article.

Overall, the Guidance is helpful in clarifying the ICO's position, which in some areas is more pragmatic than the EU approach. However, some gaps and questions remain (which we highlight below).

The Guidance in the context of the UK's online safety regime

Relationship with the OSA

The Guidance is firstly relevant for businesses using content moderation to comply with their duties under the OSA. The Guidance focuses on moderation on user-to-user ("U2U") services which allow user-generated content ("UGC", defined in the Guidance by reference to the OSA) to be uploaded/generated and encountered by other users on their service. The ICO defines content moderation (which is not defined in the OSA) as "the analysis of user-generated content to assess whether it meets certain standards; and any action a service takes as a result of this analysis."

U2U services' OSA obligations that require content moderation include duties to use proportionate measures to prevent users from encountering priority (the most serious) illegal content (which may require an element of proactivity depending on the service's risk assessment), and proportionate systems and processes to swiftly remove illegal content after becoming aware of its presence. Further duties (including preventative duties) relating to legal but harmful content apply to U2U services likely to be accessed by children, and the largest/riskiest "Category 1" U2U services.

Ofcom closed its consultation on illegal harms on 23 February 2024, only a week after the ICO published the Guidance. Under the OSA, U2U services have a "cross-cutting duty" to have particular regard to privacy, and the Ofcom consultation refers to this throughout (though at a high level). U2U services should therefore have regard to the Guidance when complying with the OSA's cross-cutting duty, in addition to their own data protection duties under the UK GDPR. Otherwise, however, the Guidance refers U2U services to Ofcom's guidance for compliance with their OSA duties.

Beyond the OSA

The Guidance also applies to businesses that use content moderation other than in connection with OSA duties. This will be relevant, for example, to businesses not directly subject to the OSA but nevertheless conducting moderation (such as hosting services without a U2U element, or U2U services falling within one of the OSA's exemptions), or to businesses subject to the OSA that conduct additional moderation, e.g. to enforce their Terms of Service ("ToS"). This type of moderation will often be a commercial decision or a response to public relations concerns and/or stakeholder pressure.

Lastly, the Guidance also applies to organisations providing content moderation services. These providers will often act as processors, though the ICO has flagged that determining processing roles in this area can be complex. This may include, for example, companies providing AI content moderation technologies or outsourced human moderation.

What personal data is processed during content moderation?

The ICO confirms that where the UGC itself is processed in a content moderation context, it is likely to be personal data, because it is either obviously about someone or connected to someone in a way that makes them identifiable (e.g. the user whose account posted the UGC).

However, other personal data may also be processed, for example contextual information used to make content moderation decisions, such as age, location and previous activity. This links to s.192 OSA, which says providers should consider "reasonably available" information when determining whether a piece of content is illegal. Businesses will need to comply with data protection law in respect of this information too, even if it has been separated from the information about the user account during the moderation process: separation only renders the data pseudonymised rather than anonymised (though it is a helpful security measure).

Furthermore, once an action has been decided on, the ICO confirms this "generated" information will also constitute the relevant user's personal data.

Compliance with the DP principles

The Guidance issues recommendations for compliance with the data protection principles when using content moderation as a controller.

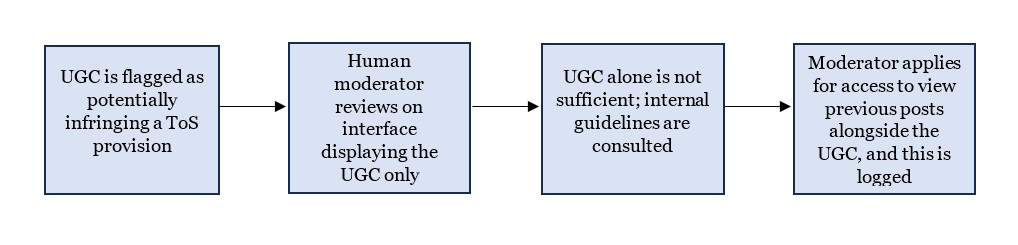

- Data minimisation – the ICO says that in many cases it should be possible to make accurate decisions based solely on the UGC in question. Where contextual information must be reviewed this should be subject to safeguards. An example flow is as follows:

- Storage limitation – personal data used in content moderation should not be retained for longer than necessary. There is a tension between the need to consider "reasonably available" contextual information (especially to avoid over-removal and infringement of freedom of expression) and the need not to retain information arbitrarily. Businesses should also consider the need to retain information to support complaints mechanisms, which are mandatory in some circumstances under the OSA; businesses also subject to the EU's Digital Services Act would need to retain that information for the six-month period during which a complaint can be brought. Data minimisation measures may help here, for example deleting full transcripts of reported content but retaining violation "flags" to the extent necessary, or retaining content only for the duration of a live appeals process.

The ICO also reminds businesses to comply in particular with the fairness, accuracy (particularly where AI tools, which carry a risk of bias and discrimination, are deployed), purpose limitation and security principles.

Which lawful basis will apply for content moderation?

The ICO says the "most likely" lawful bases under Article 6 UK GDPR will be legal obligation or legitimate interests.

- Legal obligation – the ICO confirms that this can include processing for compliance with OSA duties, so long as it is necessary and proportionate, and will "likely" also apply to measures in Ofcom's Codes of Practice, given that compliance with the Codes is an indicator of compliance with the OSA. Interestingly, the ICO says it is possible to rely on legal obligation for moderation that enforces ToS, which is an "alternative" compliance approach suggested by Ofcom in its draft illegal content judgements guidance. This is despite the fact that it may allow U2U services to moderate a broader range of content than if they were making the narrower judgements about illegality of content prescribed by the OSA.

The ICO says businesses should document the decision to rely on legal obligation and identify the legislative provision linked to the processing, which will add to compliance paperwork.

- Legitimate interests ("LI") – the ICO helpfully confirms that reliance on LI is "likely" to be possible where organisations are enforcing their ToS, provided the balancing test is met. Less helpfully, it says that where there is an impact on data subjects, this test requires businesses to show the "compelling benefit" of the interest. This is more akin to the "compelling legitimate grounds" requirement under Art 21(1) UK GDPR (right to object) than to existing guidance on LI. LI can be considered where children are involved, but businesses must "take extra care to protect their interests".

Contractual necessity may be available where processing is necessary to enforce the ToS, but the ICO says legal obligation or LI are likely to be more suitable, and businesses must consider that children may not have capacity to enter into the relevant ToS. The ICO also says it is not possible to rely on contract where processing is for the improvement of content moderation systems. This is in line with the EU's increasingly restrictive interpretation of contractual necessity.

Special category data and the OSA "gap"

Businesses must assess whether special category data ("SCD") may be processed. Given the nature of UGC that might be moderated (for example indicating political affiliation, or users' own eating disorder or mental health-related medical conditions) we think this is likely to be relevant for many U2U services.

The Guidance says SCD will be processed where UGC directly expresses SCD, or where SCD is intentionally inferred from the UGC. It will not, however, be processed where a moderator "may be able to surmise" that SCD is present but has no purpose of inferring it and does not intend to treat people differently based on such an inference. This is consistent with the ICO's narrower interpretation of SCD as compared to the EU.

The ICO suggests the most appropriate Article 9 condition for processing will be substantial public interest, citing the Part 2 Schedule 1 Data Protection Act 2018 ("DPA") conditions of (i) preventing or detecting unlawful acts, (ii) safeguarding of children and individuals at risk, or (iii) regulatory requirements (the latter applicable where establishing whether someone has committed an unlawful act or has been involved in dishonesty, malpractice or other seriously improper conduct). Businesses should note that all of these require an "appropriate policy document" to be in place (though not for (i) where disclosing data to the relevant authorities).

However, we believe a gap remains in respect of the following OSA obligations:

- For all U2U services, enforcement based on ToS that go broader than prohibiting illegal content (which, as noted above, Ofcom has suggested as an "alternative" way to comply with illegal content duties). For example, ToS may prohibit "all bikini photographs" on a service; it will be difficult to argue that such processing has a purpose of preventing unlawful acts, and it does not fit within the other conditions.

- For Category 1 services, "adult user empowerment duties", which require services to implement features enabling adults to increase control over the legal but harmful content they see. This would not fit into the above conditions either.

The ICO has not dealt with this gap so this remains an area of uncertainty.

Criminal offence information

Similarly, businesses must assess whether criminal offence information will be processed, as processing such information also requires authorisation under domestic law. This includes information "relating to" criminal convictions or offences, which the ICO confirms can include suspicions or allegations of offences.

Organisations may have an argument in some circumstances that information does not reach this threshold, such as where they are enforcing based on breach of ToS rather than making illegal content judgements. In other scenarios, however, the threshold seems particularly likely to be reached, such as where child sexual abuse material ("CSAM") is reported to the National Crime Agency ("NCA"). Assessment should be made on a case-by-case basis.

The ICO refers to the same conditions for authorisation under domestic law as with SCD, satisfaction of which (in particular "prevention or detection of unlawful acts") seems more likely in this context.

AI tools and automated decision making

Many businesses will outsource moderation to third party technology providers, some of whom will deploy AI. The ICO says that:

- "Solely automated decision-making" will occur, for example, where an AI tool classifies and takes action on content without human involvement, or determines whether different images may be similar.

However, it will not occur in the case of non-perceptual hash or URL matching, where matches are based on specific, pre-defined parameters representing things humans have already decided upon. This is more pragmatic than EU guidance on this point (see, for example, the Article 29 Working Party's example of imposing a speeding fine purely on the basis of evidence from speed cameras). It is helpful for those using matching tools for fraud prevention, provided a human has set the parameters of the relevant databases.

- Further, such a decision could have a "legal or similarly significant effect" (bringing it within the scope of Art 22 UK GDPR) where it affects a user's financial circumstances (such as a content creator whose posts are removed). The ICO does not consider broader examples where the legal effect is an impact on freedom of expression; this could feasibly engage Art 22 as well. Assessments will need to be made case-by-case, and the ICO suggests implementing a "mechanism" to determine when Art 22 is engaged, though this will be challenging for businesses to implement for bulk decision-making.

It is also unclear when providers may rely on the Art 22 exceptions. For authorisation by domestic law, the ICO refers to the OSA and Codes of Practice but does not confirm whether this law "contains suitable measures to safeguard a user's rights, freedoms and legitimate interests", which is a pre-condition of relying on that exception. The ICO leaves open the possibility of relying on contractual necessity when enforcing the ToS, stating that the processing does not need to be "absolutely essential" but must be "more than just useful". This appears to contradict its more restrictive language in the lawful basis section, and again diverges from the EU approach.

The Guidance outlines other considerations where content moderation activities are outsourced, for example the complexity of determining processing roles and the importance of regular review and audit. Where providers use personal data for "other" purposes, such as product development and improvement, the ICO says the main service "should confirm that the provider would be acting as a controller" and consider how information about this processing will be communicated to end users. This raises concerns around purpose limitation and, for providers, around the extent of their own UK GDPR compliance obligations.

Exclusions from the Guidance

Several areas are explicitly excluded from the Guidance, including behavioural profiling and reporting CSAM to the National Crime Agency (on which the ICO will provide guidance in the future), and on-device moderation, which is covered by the Privacy and Electronic Communications (EC Directive) Regulations 2003 ("PECR").

Next steps and upcoming legislative changes

The Guidance was prepared on the basis of existing UK data protection legislation. The UK Government is in the process of updating the regime via the delayed Data Protection and Digital Information ("DPDI") Bill, which now has a tentative timeline for Royal Assent of mid-2024. The Bill may well change the rules on Art 22 automated decision-making, and could potentially address some of the SCD-OSA "gaps" (though in its current form it does not).

Organisations in scope of the OSA should also keep an eye on Ofcom's Codes of Practice, with the illegal harms Codes of Practice due to come into force in late 2024 and others due to be introduced on a phased basis.

Contact Bird & Bird's privacy and online safety teams if you have any questions (including if you are not sure whether the OSA applies to you).