Singapore Introduces New Model AI Governance Framework for Agentic AI

Key Takeaways:

- On 22 January 2026, the Model AI Governance Framework for Agentic AI ("MGF"), developed by Singapore's Infocomm Media Development Authority ("IMDA"), was launched.

- Agentic AI refers to AI agents that can independently plan, reason, and take autonomous actions to achieve objectives on behalf of users.

- Compliance with the MGF is voluntary, though organisations remain legally accountable for their agents' behaviours and actions. The MGF applies to all organisations deploying agentic AI in Singapore, whether they train or develop AI agents in-house or use third-party agents.

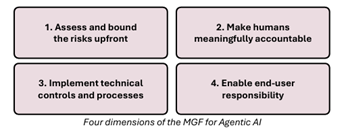

- Broadly, the MGF sets out key risks in deploying agentic AI systems, and provides guidance across four dimensions that organisations should consider when deploying agentic AI systems.

- The risk areas highlighted in the MGF are particularly relevant for organisations that access and process more sensitive data, such as financial institutions and healthcare providers (and their downstream or back-end service providers), as well as tech companies and developers that access a broad range of data for algorithmic training purposes.

- The MGF is currently open for public feedback and submission of case studies demonstrating good practices in risk bounding, human accountability allocation, technical controls implementation, safety testing, monitoring mechanisms, and end-user training.

Background:

On 22 January 2026, Minister for Digital Development and Information, Mrs Josephine Teo, announced the launch of the Model AI Governance Framework for Agentic AI ("MGF"), developed by Singapore's Infocomm Media Development Authority ("IMDA"), at the World Economic Forum 2026.

This marks the world's first governance framework specifically designed to address agentic AI systems, which refer to AI agents that can independently plan, reason, and take autonomous actions to achieve objectives on behalf of users.

The MGF builds on Singapore’s existing slate of non-binding, best-practice AI-related frameworks and guidelines, such as:

- the Proposed Guidelines on Artificial Intelligence Risk Management (see our article here), which apply to financial institutions and set out regulatory expectations for managing risks arising from the use of AI, including generative AI and agentic AI;

- the Model AI Governance Framework published by the IMDA in 2019 in collaboration with the AI Verify Foundation, and the accompanying Implementation and Self-Assessment Guide for Organisations (ISAGO);

- IMDA’s AI Verify, which is also an AI governance testing framework for companies to assess the responsible implementation of their AI systems;

- the Global AI Assurance Pilot, also launched by the IMDA and AI Verify Foundation in February 2025, which builds on AI Verify and codifies emerging norms and best practices around technical testing; and

- the Advisory Guidelines on use of Personal Data in AI Recommendation and Decision Systems (see our article here), published by the Personal Data Protection Commission in 2024, which provide guidance on using personal data to develop and deploy AI systems.

However, a key distinction of the MGF is its focus on the challenges and risks of using increasingly autonomous AI tools that do more than just generate content. These risks include unauthorised actions, data breaches, biased decision-making, and disruption to connected systems.

What is Agentic AI and What Does the MGF Address?

Unlike generative AI, agentic AI systems can take autonomous action, plan across multiple steps, and interact with other agents and systems to complete tasks without constant human intervention. Common applications include searching databases, updating records and processing payments, though the range of potential use cases is very broad as organisations discover new ways to leverage such technology across different industries.

However, the autonomous nature of agentic AI presents a host of additional risks. For instance, where an agent processes sensitive data and makes autonomous decisions based on that data, the potential harms include erroneous or unauthorised medical or financial actions, data breaches exposing confidential information, biased outcomes affecting individuals, and cascading failures when multiple agents interact unpredictably.

To manage these risks, the MGF provides guidance across four key dimensions that organisations should consider when deploying agentic AI systems.

1. Assess and Bound the Risks Upfront

Organisations should conduct rigorous risk assessments before deploying agents, considering factors such as domain sensitivity, exposure to sensitive data and linkages with external systems and external data, the scope and reversibility of actions taken by the agent, the level of autonomy granted to the agent and the complexity of the task.

Organisations should also seek to bound the risks through design by implementing access restrictions for the agent, limiting autonomy, establishing traceability to human users, and conducting threat modelling.
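As an illustration only, the idea of bounding risk by design can be sketched in a few lines of Python. The sketch assumes a hypothetical `BoundedAgent` wrapper (not something defined in the MGF) that restricts an agent to an allowlist of tools and records every attempted action against the human user it acts for. The class name, field names and tool names are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class BoundedAgent:
    """Hypothetical wrapper that restricts an agent to an allowlist of tools."""
    agent_id: str
    acting_for: str            # human user the agent acts on behalf of
    allowed_tools: frozenset   # access restriction: anything else is denied
    audit_log: list = field(default_factory=list)

    def call_tool(self, tool: str, **params) -> bool:
        """Record the attempt, then report whether the call is permitted."""
        permitted = tool in self.allowed_tools
        # Traceability: every attempt is tied back to a responsible human user.
        self.audit_log.append({
            "agent": self.agent_id,
            "on_behalf_of": self.acting_for,
            "tool": tool,
            "params": params,
            "permitted": permitted,
        })
        return permitted


agent = BoundedAgent("claims-bot", "alice@example.com", frozenset({"search_records"}))
print(agent.call_tool("search_records", query="policy 123"))  # True
print(agent.call_tool("process_payment", amount=500))         # False
```

Denied attempts are logged as well as permitted ones, so a reviewer can later see what the agent tried to do, not only what it was allowed to do.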

2. Make Humans Meaningfully Accountable

The MGF emphasises that human accountability remains paramount. Organisations should allocate responsibility clearly across the teams involved and include clear contractual accountability provisions when engaging external parties. Human accountability should also be built in through defined checkpoints requiring human approval, and organisations should combat “automation bias” through training and regular audits of the effectiveness of human oversight. Lastly, the MGF recommends real-time monitoring and escalation of unexpected agent behaviour.
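A human approval checkpoint of the kind described above might look like the following minimal sketch. It assumes a hypothetical `execute_with_checkpoint` helper and an illustrative set of actions treated as irreversible. Neither is taken from the MGF, and which actions require approval is a policy decision for each organisation.

```python
from typing import Callable

# Assumption for this example only: the actions an organisation treats as
# irreversible and therefore subject to human approval.
IRREVERSIBLE_ACTIONS = {"process_payment", "delete_record"}


def execute_with_checkpoint(action: str,
                            run: Callable[[], str],
                            approve: Callable[[str], bool]) -> str:
    """Run an agent action, asking a human first when it is irreversible."""
    if action in IRREVERSIBLE_ACTIONS and not approve(action):
        return f"{action}: rejected by human reviewer"
    return run()


# The reviewer declines, so the payment is never executed.
result = execute_with_checkpoint("process_payment",
                                 run=lambda: "paid",
                                 approve=lambda action: False)
print(result)  # process_payment: rejected by human reviewer
```

In practice the `approve` callback would route to a real reviewer (for example, a ticket or approval queue) rather than a lambda; it is passed in as a parameter here so the checkpoint logic stays separate from how approval is obtained.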

3. Implement Technical Controls and Processes

Organisations should implement controls across the AI lifecycle. During development, the agent should be prompted to reflect on and log its plans for evaluation, and the organisation should limit the agent’s access to only what is necessary and run everything in secure, controlled environments. Before deployment, the organisation should test the agent thoroughly for accuracy and reliability, including in edge cases, and ensure that both individual agents and multi-agent systems work properly in realistic environments. Lastly, deployment should be gradual and based on risk level, and the agent should be continuously monitored (with the use of alert thresholds and failsafe mechanisms).
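The continuous monitoring step, with alert thresholds and a failsafe, could be sketched roughly as follows. The `AgentMonitor` class, its rolling-window approach and all threshold values are assumptions chosen for illustration; the MGF does not prescribe particular metrics or numbers.

```python
from collections import deque


class AgentMonitor:
    """Hypothetical rolling monitor: alerts, then halts, as failures rise."""

    def __init__(self, window=20, alert_at=0.10, halt_at=0.25, min_samples=5):
        self.outcomes = deque(maxlen=window)  # True means the action failed
        self.alert_at = alert_at
        self.halt_at = halt_at
        self.min_samples = min_samples
        self.halted = False

    def record(self, failed: bool) -> str:
        """Record one agent action and return 'ok', 'alert' or 'halt'."""
        self.outcomes.append(failed)
        if self.halted:
            return "halt"        # failsafe: stays halted until a human resets it
        if len(self.outcomes) < self.min_samples:
            return "ok"          # too few observations to judge the rate
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate >= self.halt_at:
            self.halted = True
            return "halt"
        return "alert" if rate >= self.alert_at else "ok"
```

Once halted, the monitor stays halted regardless of later successes, which reflects the idea that resuming an agent after a failsafe trips should be a human decision rather than an automatic one.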

4. Enable End-User Responsibility

Organisations must provide sufficient information to enable end users to use agents responsibly. The MGF distinguishes between two user types requiring different approaches – namely users interacting with agents (e.g., customers, end-users), and users integrating agents into processes (e.g., employees). For the first type of users, it is particularly important to inform and notify them of the use and limitations of agentic AI tools, and to provide a human touchpoint for escalation. For the second type of users, the focus should instead be on training and education, to empower the users to use agentic AI tools wisely and accurately.

Key Takeaways / Next Steps

Organisations deploying or considering agentic AI should review current or planned deployments against the MGF’s risk factors and governance dimensions, assess gaps between existing practices and the framework's recommendations, and update policies and training programmes to embed the framework's principles. Interested parties may also consider submitting feedback on the MGF or case studies to the IMDA (noting that contributors will be acknowledged in updated versions of the MGF).

This article is produced by our Singapore office, Bird & Bird ATMD LLP. It does not constitute legal advice and is intended to provide general information only. Information in this article is accurate as of 23 January 2026.